How accurate are reports?

One of the big topics of discussion in deliverability circles right now is the trouble many senders are having with delivery to Microsoft properties. One of the challenges is that Microsoft seems happy with how their filters are working, while senders are seeing vastly different data. That got me thinking about reporting: how we generate reports, and how we know the reports are correct.

Everyone I know has bodged a SQL query at some point or another. I recently shared one of my scripts with Steve, and he pointed out that I'd left out a %, so one line wasn't going to match. When I'm creating scripts, I compare them against manual queries and make sure the right number of records are updated. Apparently I missed this one. What it does mean is that all my reporting will be wrong. In this case, it's not a huge deal: the domain in question belonged to a free email provider that was acquired back in 2016 and may or may not actually provide email services any longer.
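To show how easily a missing % slips through, here's a minimal sketch using Python's built-in sqlite3 module. The table, column and domains are hypothetical, not taken from my actual script. In SQL, `LIKE` only does wildcard matching where the pattern contains `%` or `_`; drop the leading `%` and the pattern has to match the entire address, so it quietly matches nothing.

```python
import sqlite3

# Hypothetical recipient list; table, column and domains are illustrative only.
ADDRESSES = [
    "alice@example.com",
    "bob@example.net",
    "carol@example.net",
]


def count_matches(conn: sqlite3.Connection, pattern: str) -> int:
    """Count rows whose email matches a SQL LIKE pattern."""
    row = conn.execute(
        "SELECT COUNT(*) FROM recipients WHERE email LIKE ?", (pattern,)
    ).fetchone()
    return row[0]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE recipients (email TEXT)")
conn.executemany(
    "INSERT INTO recipients (email) VALUES (?)", [(a,) for a in ADDRESSES]
)

# Intended pattern: the leading % matches any local part before the @.
good = count_matches(conn, "%@example.net")
# Bodged pattern: with no %, LIKE must match the whole string, so nothing matches.
bad = count_matches(conn, "@example.net")

print(good, bad)  # 2 0
```

Note that the bodged query doesn't raise an error; it just returns zero. That's why comparing a script's output against a manual count is what catches it.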

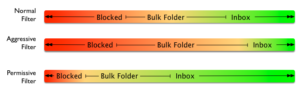

Microsoft has its own history of problematic reporting in its SNDS (Smart Network Data Services) product. SNDS provides clear “red, yellow, green” coding of mail. According to Microsoft's documentation, red is definitely spam, green is definitely not spam and yellow is the 80% in the middle. Makes total sense and sounds awesome. The problem is that the colors seem to have no correlation with how mail is actually delivered. I’ve had clients with solid red and great inbox delivery, and clients with solid green whose mail all goes to spam. The reporting doesn’t match the behaviour. In fact, my go-to answer for SNDS color questions is “the colors are a lie.”

Thing is, the colors have been a lie for as long as I’ve been using SNDS. I’ve told MS folks the colors are a lie. I’ve filed reports about the colors not accurately reflecting delivery. Most of the time the MS employees simply agree with me.

All of which led me to wonder whether some of the problem with Microsoft is that some of their internal reporting is wrong, and what they’re seeing doesn’t accurately reflect what’s happening with delivery. Maybe someone bodged a SQL query, but there’s no incentive to go back and check all the queries. When you’re monitoring delivery and filtering, there has to be reporting; there’s just too much information to go through by hand.

The part about Microsoft is all rampant speculation on my part. But there is a lesson here for anyone working in “big data”, and these days email marketing and deliverability are big data. Regularly run your reports against a known data set to make sure they’re reporting what you think they are and giving you accurate information. Inaccurate reports aren’t actionable, but unless you check, you’ll never know your reporting is broken.
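Here's a minimal sketch of what running a report against a known data set might look like, again in Python with sqlite3. The `deliveries` table, the report query and the fixture rows are all hypothetical. The idea is to keep a data set small enough that you can work out the correct answer by hand, run the real report query over it, and flag any difference.

```python
import sqlite3

# Hypothetical report query: delivered messages per recipient domain.
REPORT_SQL = """
    SELECT substr(email, instr(email, '@') + 1) AS domain, COUNT(*)
    FROM deliveries
    WHERE status = 'delivered'
    GROUP BY domain
    ORDER BY domain
"""

# A small, known data set whose correct answer was worked out by hand.
KNOWN_ROWS = [
    ("alice@example.com", "delivered"),
    ("bob@example.net", "delivered"),
    ("carol@example.net", "bounced"),
    ("dave@example.net", "delivered"),
]
EXPECTED = {"example.com": 1, "example.net": 2}


def run_report(rows, sql=REPORT_SQL):
    """Load the rows into a throwaway database and run the report query."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE deliveries (email TEXT, status TEXT)")
    conn.executemany("INSERT INTO deliveries VALUES (?, ?)", rows)
    result = dict(conn.execute(sql).fetchall())
    conn.close()
    return result


def check_report(sql=REPORT_SQL):
    """Return a list of mismatches between the report and the expected answer."""
    actual = run_report(KNOWN_ROWS, sql)
    problems = []
    for domain in sorted(set(EXPECTED) | set(actual)):
        want, got = EXPECTED.get(domain, 0), actual.get(domain, 0)
        if want != got:
            problems.append(f"{domain}: expected {want}, got {got}")
    return problems


if __name__ == "__main__":
    issues = check_report()
    print("report OK" if not issues else "\n".join(issues))
```

Running a check like this on a schedule, or as part of a test suite, turns a bodged query into a visible failure instead of a quietly wrong number.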