The data are what they are

I’ve had a lot less opportunity to blog at the recent M3AAWG conference than I expected. Some of that is because of the great content and conversations. Some of it is a lack of time and focus to edit and refine a longer post prompted by the conference. The final issue is the confidential nature of what we talk about.

With that being said, I can talk about a discussion I had with different folks about a Mailchimp blog post looking at A/B testing. The whole post is worth a quick read, but the short version is this: when you’re doing A/B testing, design the test so you’re measuring the relevant outcome. If you are looking for the best whatever to get engagement, then your outcome should be engagement. If you’re looking for the best thing to improve revenue, then test for revenue.

Of course, this makes perfect sense. If you do a test, the test should measure the outcome you want. Using a test that looks at engagement and hoping that translates to revenue is no better than just picking one option at random.
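To make that concrete, here is a minimal sketch of picking a winner by two different outcomes. The numbers are invented for illustration and are not from Mailchimp's post; `VariantResult` and `winner` are hypothetical names, and the sketch ignores statistical significance entirely.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class VariantResult:
    """Aggregate results for one arm of an A/B test (hypothetical data)."""
    name: str
    sent: int
    opens: int
    revenue: float  # total revenue attributed to this arm

    @property
    def open_rate(self) -> float:
        return self.opens / self.sent

    @property
    def revenue_per_send(self) -> float:
        return self.revenue / self.sent


def winner(results: List[VariantResult],
           metric: Callable[[VariantResult], float]) -> str:
    """Return the name of the arm with the highest value of the chosen metric."""
    return max(results, key=metric).name


# Made-up numbers: A gets more opens, B brings in more money.
results = [
    VariantResult("A", sent=1000, opens=300, revenue=450.0),
    VariantResult("B", sent=1000, opens=220, revenue=610.0),
]

winner_by_opens = winner(results, lambda r: r.open_rate)
winner_by_revenue = winner(results, lambda r: r.revenue_per_send)

print(f"Best open rate: {winner_by_opens}")
print(f"Best revenue per send: {winner_by_revenue}")
```

With these invented numbers the two metrics pick different arms, which is the whole point: the metric you pass in decides the answer you get.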

That particular blog post garnered a round of discussion in another forum where folks disagreed with the data. To listen to the posters, the data had to be wrong because they don’t conform to “common wisdom.” The fact that data don’t conform to common wisdom doesn’t make them wrong. The data are the data. They may not answer the question the researcher thought they were asking. They may not conform to common wisdom. But barring fraud or massive collection error, the data are what they are. I give Mailchimp the benefit of the doubt when it comes to how they collect data, as I know they have a number of data scientists on staff. I’ve also talked with various employees about digging into their data.

At the same time the online discussion of the Mailchimp data was happening, there was a similar discussion happening at the conference. A group of researchers got together to ask a question. They did their literature review, they stated their hypothesis, they designed the tests, they ran the tests. Unfortunately, despite this all being done well, the data showed that their test condition had no effect. The data were negative. They asked the question a different way, still negative. They asked a third way and still saw no difference between the controls and the test.

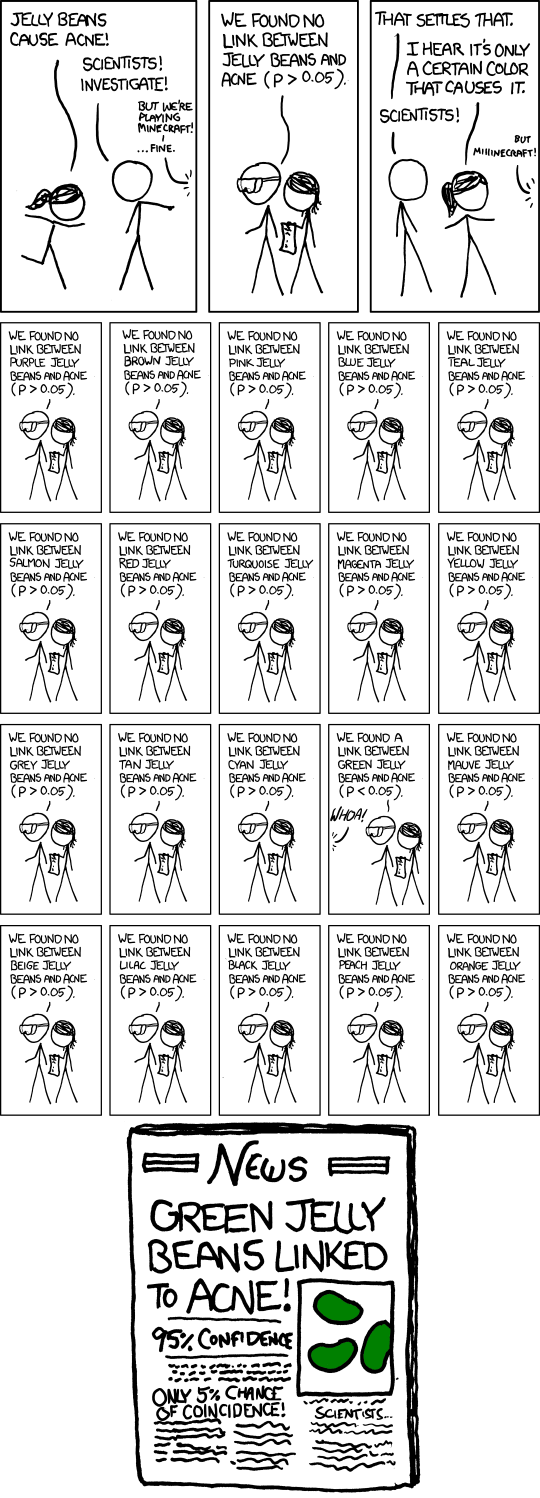

They presented this data at the conference. Well, this data went against common wisdom, too, and many of the session participants challenged the data. Not because it was collected badly, it wasn’t, but because they wanted it to say something else. It was the conference session equivalent of data dredging or p-hacking.

Overall, the data collected in any test, from a simple marketing A/B test through to a phase III clinical trial, are the answer to the question you asked. But just having the data doesn’t always make the next step clear. Sometimes the question you meant to ask isn’t the one you tested. This doesn’t mean you can retroactively find signal in the noise.
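A rough way to see why re-slicing a negative result is dangerous: if there is truly no effect and each look at the data is an independent test at the usual 0.05 threshold, the chance of at least one spurious "win" is 1 - (1 - 0.05)^k after k looks. The sketch below just evaluates that formula. The independence assumption and the 0.05 threshold are mine, not from either study discussed here, and the function name is hypothetical.

```python
def familywise_false_positive_rate(num_tests: int, alpha: float = 0.05) -> float:
    """Chance of at least one false positive across independent tests
    when the null hypothesis is true for every one of them."""
    if num_tests < 0:
        raise ValueError("num_tests must be non-negative")
    return 1.0 - (1.0 - alpha) ** num_tests


# How quickly "just one more cut of the data" adds up.
rates = {k: familywise_false_positive_rate(k) for k in (1, 3, 10, 20)}

for k, rate in rates.items():
    print(f"{k:>2} looks at the data: {rate:.1%} chance of a spurious result")
```

Real re-analyses of the same data are rarely independent, so treat this as an illustration of the direction of the problem rather than an exact figure.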

Mailchimp’s research shows that A/B testing for open rates doesn’t have any effect on revenue. If your final goal is to know which copy or subject line makes more revenue, then you need to test for revenue. No amount of arguing is going to change that data.