Are seed lists still relevant?

- laura

- February 16, 2017

- Best practices

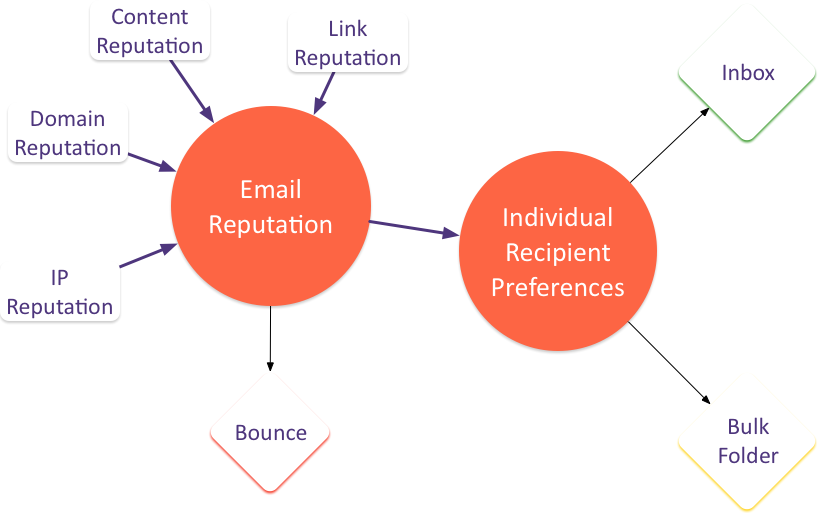

Those of you who have seen my talks will recognize this model of email delivery. The idea is that a host of factors contribute to the reputation of a particular email, but at many ISPs that reputation is only one factor in delivery. Recipient preferences drive whether an email ends up in the bulk folder or the inbox.

Individual recipient preferences can be explicit or implicit. Users who add a sender to their address book, block a sender, or create a filter for a particular email are stating an explicit preference. ISPs also monitor some user behavior to determine how wanted an email is. A recipient who moves an email from the bulk folder to the inbox is stating a preference. So is a person who hits “this is spam.” ISPs measure other actions as well to build a user-specific reputation for a given mail.

Seed accounts aren’t like normal accounts. They never send mail; they only download it. They never dig anything out of the junk folder, and they never hit “this is spam.” They behave differently from real user accounts, and ISPs can track that.

This tells us we have to take inbox monitoring tools with a grain of salt. I believe, though, that they’re still valuable tools in the deliverability arsenal. The best use of these tools is monitoring for changes. If seed lists show less than 100% inbox but response rates are good, then the seed boxes probably aren’t reflecting what actual recipients see. But if seed lists have been showing 100% inbox and that number starts to drop, it’s time to look harder at the overall program.
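For readers who track seed results in a spreadsheet or script, the “watch for changes” approach can be sketched in a few lines of Python. This is a hypothetical illustration, not any vendor’s tool: the `SeedResult` record, the `flag_drops` helper, the three-campaign window and the 10-point threshold are all assumptions chosen for the example. The point it shows is that each campaign is compared with the sender’s own recent seed history rather than with a 100% inbox target.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class SeedResult:
    """Hypothetical per-campaign seed summary."""
    campaign: str
    inbox: int  # seeds that reported the message in the inbox
    total: int  # seeds that reported at all

    @property
    def inbox_rate(self) -> float:
        # Guard against an empty seed run.
        return self.inbox / self.total if self.total else 0.0


def flag_drops(results, window=3, threshold=0.10):
    """Return campaigns whose seed inbox rate fell more than `threshold`
    below the average of the previous `window` campaigns.

    A steady rate below 100% is not flagged; only a drop relative to
    the sender's own recent baseline is.
    """
    flagged = []
    for i in range(window, len(results)):
        baseline = mean(r.inbox_rate for r in results[i - window:i])
        if baseline - results[i].inbox_rate > threshold:
            flagged.append(results[i].campaign)
    return flagged


history = [
    SeedResult("jan-05", 48, 50),
    SeedResult("jan-12", 49, 50),
    SeedResult("jan-19", 48, 50),
    SeedResult("jan-26", 40, 50),  # sudden drop worth investigating
]
print(flag_drops(history))  # ['jan-26']
```

A fixed window against the sender’s own history is used here because, as noted above, absolute seed numbers may not match what real recipients see; the window size and threshold would need tuning to a program’s normal variation.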

The other time seed lists are useful is when troubleshooting delivery. They let you see whether the changes you make are having an effect on delivery. Again, the results aren’t 100% accurate, but they are the best we have right now.