Alice and Bob Sign Messages

Alice and Bob can send messages privately via a nosy postman, but how does Bob know that a message he receives is really from Alice, rather than from the postman pretending to be Alice?

If they’re using symmetric-key encryption, and Bob is sure that he was talking to Alice when they exchanged keys, then he already knows that the mail is from Alice. Only he and Alice have the keys used to encrypt and decrypt their messages, so if Bob can decrypt a message, he knows that either he or Alice encrypted it. But exchanging keys in advance isn’t always possible, especially if Alice and Bob haven’t met.
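In practice, shared-key authentication is usually done with a message authentication code (MAC) such as HMAC, rather than by relying on successful decryption alone. Only someone holding the shared key can produce a tag that verifies, which gives Bob the same “either he or Alice” guarantee. A minimal sketch using Python’s standard `hmac` module; the randomly generated key and the example messages are made up for illustration:

```python
import hashlib
import hmac
import secrets

# The secret Alice and Bob agreed on when they met (random here, for illustration).
shared_key = secrets.token_bytes(32)

def make_tag(key: bytes, message: bytes) -> bytes:
    """HMAC-SHA256 tag: only someone holding `key` can compute it."""
    return hmac.new(key, message, hashlib.sha256).digest()

def is_authentic(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(make_tag(key, message), tag)

# Alice sends the message together with its tag.
message = b"Meet me at noon"
tag = make_tag(shared_key, message)

# Bob, who holds the same key, accepts the genuine message...
print(is_authentic(shared_key, message, tag))                  # True
# ...and rejects a version the postman altered in transit.
print(is_authentic(shared_key, b"Meet me at midnight", tag))   # False
```

Because both of them hold the same key, the tag can’t tell Bob which of the two wrote the message, only that it was one of them.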

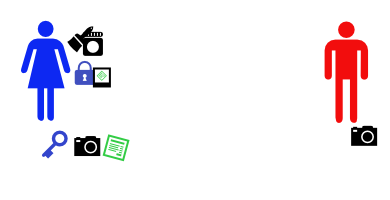

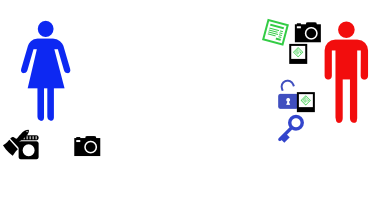

Alice’s shopping list is longer for signing messages than for encrypting them (and the mapping from cryptography to real-world metaphors is more strained). She buys some identical keys and matching padlocks, some glue, and a camera. The camera isn’t a great one (funhouse-mirror lens, bad Instagram filters, 1970s-era Polaroid film), so if you take a photo of a message you can’t read the message from the photo. It is consistent, though: photographing the same message with the same kind of camera always produces exactly the same photo. Bob also buys an identical camera.

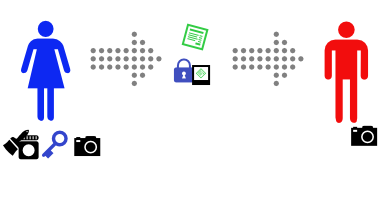

Alice takes a photo of the message.

Then Alice glues the photo to one of her padlocks.

Alice sends Bob the message and the padlock with the photo glued to it.

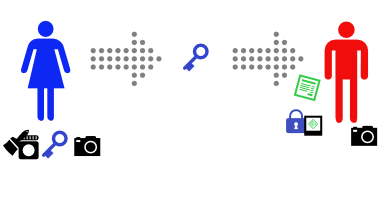

Bob sees that the message claims to come from Alice, so he asks Alice for her key.

(If you’re paying attention, you’ll see a problem with this step…)

Bob uses Alice’s key to open the padlock. It opens (and, to keep things simple, breaks).

Bob then takes a photo of the message with his camera, and compares it with the one glued to the padlock. It’s identical.

Because Alice’s key opens the padlock, Bob knows that the padlock came from Alice. Because the photo is attached to the padlock, he knows that the attached photo came from Alice. And because the photo Bob took of the message is identical to the attached photo, Bob knows that the message came from Alice.

This is how real-world public-key authentication is often done.

- Alice generates a key pair, consisting of her public key (blue key) and private key (not shown here, but it’s how she acquires the blue padlock)

- Alice takes a cryptographic hash of the message she wants to sign (the photo) using a commonly known hash algorithm (the funhouse cameras that both Alice and Bob have)

- Alice encrypts the cryptographic hash with her private key, creating a signature for the message (the padlock with attached photo)

- Alice sends the message and the signature to Bob (they can be sent separately, but the signature is almost always attached to the message to create a signed message)

- Bob fetches Alice’s public key (the blue key)

- Bob takes a cryptographic hash of the message (photo) with the same algorithm that Alice used (identical funhouse camera)

- Bob validates the signature against the hash (unlocks the blue padlock) with Alice’s public key
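The steps above can be sketched in Python as “textbook” RSA: hash the message with SHA-256, raise the hash to the private exponent to sign, and raise the signature to the public exponent to check it. This is a toy under loud assumptions: key generation is hand-rolled (Miller-Rabin primality testing), there is no padding (real RSA signatures, including DKIM’s rsa-sha256, wrap the hash in RSASSA-PKCS1-v1_5 padding first), and all function names are hypothetical. It is for illustration, not for protecting real mail.

```python
import hashlib
import math
import secrets

# --- toy key generation (hand-rolled; illustration only) ---
def is_probable_prime(n: int, rounds: int = 40) -> bool:
    """Miller-Rabin probabilistic primality test."""
    if n < 2:
        return False
    for p in (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37):
        if n % p == 0:
            return n == p
    d, s = n - 1, 0
    while d % 2 == 0:
        d //= 2
        s += 1
    for _ in range(rounds):
        x = pow(secrets.randbelow(n - 3) + 2, d, n)  # random base in [2, n-2]
        if x in (1, n - 1):
            continue
        for _ in range(s - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False  # found a witness: n is composite
    return True

def generate_keypair(bits: int = 1024, e: int = 65537):
    """Return (public_key, private_key) as (n, e) and (n, d)."""
    def random_prime(k: int) -> int:
        while True:
            c = secrets.randbits(k) | (1 << (k - 1)) | 1  # k bits, odd
            if is_probable_prime(c):
                return c
    while True:
        p, q = random_prime(bits // 2), random_prime(bits // 2)
        phi = (p - 1) * (q - 1)
        if p != q and math.gcd(e, phi) == 1:
            return (p * q, e), (p * q, pow(e, -1, phi))  # pow(..., -1, ...) needs Python 3.8+

# --- hash, sign, verify ---
def message_hash(message: bytes) -> int:
    """The 'photo': the SHA-256 digest of the message, as an integer."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big")

def sign(message: bytes, private_key) -> int:
    """The 'padlock with photo': the hash raised to the private exponent."""
    n, d = private_key
    return pow(message_hash(message), d, n)

def verify(message: bytes, signature: int, public_key) -> bool:
    """'Unlock' with the public key and compare against a fresh hash of the message."""
    n, e = public_key
    return pow(signature, e, n) == message_hash(message)

alice_public, alice_private = generate_keypair()
msg = b"Hi Bob, this is Alice"
sig = sign(msg, alice_private)

print(verify(msg, sig, alice_public))                           # True
print(verify(b"Send money to the postman", sig, alice_public))  # False
```

The second check fails because Bob’s fresh hash of the altered message (his photo) no longer matches the hash recovered from the signature.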

Some things to note:

- The message was publicly visible – there was nothing to stop the postman reading it. You could use encryption to protect it, but that’s a separate process – encryption and signing are orthogonal. You can encrypt, or you can sign, or you can do both.

- We inverted what the keys and padlocks mean compared to the last Alice and Bob story. Today the key is Alice’s public key; last time it was Bob’s private key.

- The key pair for signing messages is generated in exactly the same way as the one used for encrypting messages, and it’s very common to use the same key pair for both signing and encryption. When you send a signed, encrypted message to someone else, you sign it with your private key and encrypt it with their public key. When your recipient receives the message, they decrypt it with their private key and validate the signature with your public key.
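A sketch of the sign-and-encrypt flow from the last note, using the same toy textbook-RSA approach (hypothetical helper names, no padding, hand-rolled key generation, illustration only). Each party has a single key pair that serves both purposes: Alice’s private key signs, Bob’s public key encrypts, and the signature and the encryption are computed independently of each other.

```python
import hashlib
import math
import secrets

# --- toy key generation (hand-rolled; illustration only) ---
def is_probable_prime(n: int, rounds: int = 40) -> bool:
    """Miller-Rabin probabilistic primality test."""
    if n < 2:
        return False
    for p in (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37):
        if n % p == 0:
            return n == p
    d, s = n - 1, 0
    while d % 2 == 0:
        d //= 2
        s += 1
    for _ in range(rounds):
        x = pow(secrets.randbelow(n - 3) + 2, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(s - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False
    return True

def generate_keypair(bits: int = 1024, e: int = 65537):
    """Return (public_key, private_key) as (n, e) and (n, d)."""
    def random_prime(k: int) -> int:
        while True:
            c = secrets.randbits(k) | (1 << (k - 1)) | 1
            if is_probable_prime(c):
                return c
    while True:
        p, q = random_prime(bits // 2), random_prime(bits // 2)
        phi = (p - 1) * (q - 1)
        if p != q and math.gcd(e, phi) == 1:
            return (p * q, e), (p * q, pow(e, -1, phi))  # needs Python 3.8+

# --- signing: hash, then private exponent; checking: public exponent ---
def sha256_int(data: bytes) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big")

def sign(message: bytes, private_key) -> int:
    n, d = private_key
    return pow(sha256_int(message), d, n)

def verify(message: bytes, signature: int, public_key) -> bool:
    n, e = public_key
    return pow(signature, e, n) == sha256_int(message)

# --- encryption: public exponent; decryption: private exponent ---
def encrypt(message: bytes, public_key) -> int:
    n, e = public_key
    m = int.from_bytes(message, "big")
    if m >= n:
        raise ValueError("toy scheme: message must be smaller than the modulus")
    return pow(m, e, n)

def decrypt(ciphertext: int, private_key) -> bytes:
    n, d = private_key
    m = pow(ciphertext, d, n)
    # Leading zero bytes would be lost here; fine for this text example.
    return m.to_bytes((m.bit_length() + 7) // 8, "big")

alice_public, alice_private = generate_keypair()
bob_public, bob_private = generate_keypair()
message = b"Meet me at noon"

# Alice signs with HER private key and encrypts with BOB's public key.
signature = sign(message, alice_private)
ciphertext = encrypt(message, bob_public)

# Bob decrypts with HIS private key and checks the signature with ALICE's public key.
received = decrypt(ciphertext, bob_private)
print(received)                                   # b'Meet me at noon'
print(verify(received, signature, alice_public))  # True
```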

How does DKIM use this to sign messages?

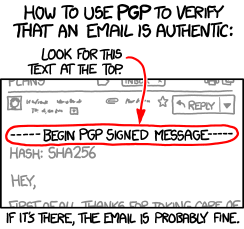

The algorithm (funhouse camera) that’s used to generate the hash is defined in the DKIM-Signature header by the c= and a= tags (there are several steps: c= chooses how the message is normalized before hashing, and a= names the algorithms; the final step is the cryptographic hash proper, typically SHA-256). The hash of the message body (photo) is stored in the bh= tag of the DKIM-Signature header, and the signature itself (padlock-with-photo) is stored in the b= tag. Strictly, b= signs a hash of selected headers, including the DKIM-Signature header itself, so it covers the body through bh=. The recipient uses the d= tag to find out who signed the message, and retrieves the signer’s public key (blue key) via a DNS lookup. Then they use that public key to validate the RSA signature (unlock the padlock). Finally, they normalize and hash the message in the same way as the sender did, and confirm that the hashes match.
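The body-hash half of this can be sketched in Python for a signature using c=relaxed/relaxed and a=rsa-sha256: canonicalize the body with the “relaxed” body algorithm from RFC 6376 (section 3.4.4), hash it with SHA-256, and base64-encode the digest. Assumptions: the body already uses CRLF line endings, there is no l= length limit, and the function names are hypothetical.

```python
import base64
import hashlib
import re

def relaxed_body(body: bytes) -> bytes:
    """'relaxed' body canonicalization, assuming CRLF line endings."""
    lines = []
    for line in body.split(b"\r\n"):
        line = re.sub(rb"[ \t]+", b" ", line)  # collapse each run of spaces/tabs to one space
        lines.append(line.rstrip(b" "))         # then drop whitespace at the end of the line
    while lines and lines[-1] == b"":           # ignore empty lines at the end of the body
        lines.pop()
    if not lines:
        return b""                              # an empty body stays empty
    return b"\r\n".join(lines) + b"\r\n"        # a non-empty body ends with one CRLF

def body_hash(body: bytes) -> str:
    """The value a signer would put in bh= (relaxed body, SHA-256)."""
    digest = hashlib.sha256(relaxed_body(body)).digest()
    return base64.b64encode(digest).decode("ascii")

def body_hash_matches(body: bytes, bh_tag: str) -> bool:
    """Verifier side: recompute the body hash and compare it with bh=."""
    return body_hash(body) == "".join(bh_tag.split())  # whitespace inside bh= is ignored

print(body_hash(b""))                                # 47DEQpj8HBSa+/TImW+5JCeuQeRkm5NMpJWZG3hSuFU=
print(relaxed_body(b"Hello  \t world \r\n\r\n\r\n"))  # b'Hello world\r\n'

# Extra whitespace and trailing blank lines added in transit don't change the hash.
original = b"Hi Bob,\r\nSee you at noon.\r\n"
reformatted = b"Hi Bob,   \r\nSee you   at noon.\r\n\r\n"
print(body_hash_matches(reformatted, body_hash(original)))  # True
```

The normalization step is why DKIM can survive mail servers that fiddle with whitespace: both sides photograph the same tidied-up version of the message.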

There’s a more detailed description at dkimcore.org, and the formal specification is in RFC 4871 (since obsoleted by RFC 6376).

About that problem …

There’s a huge hole in the process as described. When Bob retrieves Alice’s public key, how does he know it wasn’t tampered with en route? If an attacker, Mallory, has access to the communication channel, he can send a message claiming to be from Alice but signed with his own private key. When Bob requests Alice’s public key, Mallory can intercept that request too and reply with his own public key instead. The forged signature will validate against that substituted key, and Bob will believe that the impostor message he received from Mallory really came from Alice.
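The key-substitution attack is easy to reproduce with the same toy textbook-RSA helpers (hypothetical names, no padding, hand-rolled key generation, illustration only). Here a plain dictionary stands in for whatever channel Bob uses to fetch public keys, and Mallory controls one copy of it.

```python
import hashlib
import math
import secrets

# --- toy key generation (hand-rolled; illustration only) ---
def is_probable_prime(n: int, rounds: int = 40) -> bool:
    """Miller-Rabin probabilistic primality test."""
    if n < 2:
        return False
    for p in (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37):
        if n % p == 0:
            return n == p
    d, s = n - 1, 0
    while d % 2 == 0:
        d //= 2
        s += 1
    for _ in range(rounds):
        x = pow(secrets.randbelow(n - 3) + 2, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(s - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False
    return True

def generate_keypair(bits: int = 1024, e: int = 65537):
    """Return (public_key, private_key) as (n, e) and (n, d)."""
    def random_prime(k: int) -> int:
        while True:
            c = secrets.randbits(k) | (1 << (k - 1)) | 1
            if is_probable_prime(c):
                return c
    while True:
        p, q = random_prime(bits // 2), random_prime(bits // 2)
        phi = (p - 1) * (q - 1)
        if p != q and math.gcd(e, phi) == 1:
            return (p * q, e), (p * q, pow(e, -1, phi))  # needs Python 3.8+

def sha256_int(data: bytes) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big")

def sign(message: bytes, private_key) -> int:
    n, d = private_key
    return pow(sha256_int(message), d, n)

def verify(message: bytes, signature: int, public_key) -> bool:
    n, e = public_key
    return pow(signature, e, n) == sha256_int(message)

alice_public, alice_private = generate_keypair()
mallory_public, mallory_private = generate_keypair()

# Two views of the key-lookup channel: the real one, and one Mallory has tampered with.
honest_channel = {"alice": alice_public}
tampered_channel = {"alice": mallory_public}

# Mallory writes a message "from Alice" and signs it with HIS OWN private key.
forged = b"Bob, please pay this invoice. Love, Alice"
forged_signature = sign(forged, mallory_private)

print(verify(forged, forged_signature, honest_channel["alice"]))    # False
print(verify(forged, forged_signature, tampered_channel["alice"]))  # True
```

Nothing in the signature math is broken; the check is only as trustworthy as the channel that delivered the public key.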

There are several solutions to this problem. The simplest is to retrieve the public key over a channel that is supposedly more secure. That’s the approach DKIM takes, relying on the integrity of the Domain Name System to publish the sender’s public key. That’s really not terribly secure, as there are lots of ways to compromise DNS. But it’s simple, and it’s “good enough” for DKIM’s purpose: being able to bypass some spam or phishing filters by masquerading as someone else isn’t a particularly valuable target. DKIM key lookups will become more secure as DNSSEC is deployed more widely. (If your DNS isn’t protected by DNSSEC, you should probably put it on your medium-term list of things to consider.)
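To make the DNS step concrete: a verifier combines the s= (selector) and d= (domain) tags of the DKIM-Signature header into the name `<selector>._domainkey.<domain>`, fetches that name’s TXT record, and reads the base64-encoded public key from the record’s p= tag (RFC 6376, section 3.6). A minimal Python sketch of the string handling only; the actual TXT lookup needs a DNS library and is left out, and the selector, domain and key values below are made-up placeholders.

```python
import base64

def parse_tag_list(text: str) -> dict:
    """Parse a DKIM tag=value list such as 'v=1; a=rsa-sha256; d=example.com'."""
    tags = {}
    for part in text.split(";"):
        if not part.strip():
            continue  # allow a trailing semicolon
        name, _, value = part.partition("=")  # split at the first '=' only
        # Remove whitespace (including header folding), which DKIM ignores in b=, bh= and p=.
        tags[name.strip()] = "".join(value.split())
    return tags

def key_query_name(signature_header_value: str) -> str:
    """DNS name whose TXT record should hold the signer's public key."""
    tags = parse_tag_list(signature_header_value)
    return f"{tags['s']}._domainkey.{tags['d']}"

def public_key_bytes(txt_record: str) -> bytes:
    """Extract and base64-decode the p= tag of a DKIM key record."""
    tags = parse_tag_list(txt_record)
    if tags.get("v", "DKIM1") != "DKIM1":
        raise ValueError("not a DKIM key record")
    if not tags.get("p"):
        raise ValueError("empty p= means the key has been revoked")
    return base64.b64decode(tags["p"])

# Placeholder header value (folded across two lines, as it might arrive).
sig_header = ("v=1; a=rsa-sha256; c=relaxed/relaxed; d=example.com; s=mail2024;\r\n"
              "\th=from:to:subject; bh=47DEQpj8HBSa+/TImW+5JCeuQeRkm5NMpJWZG3hSuFU=; b=dGVzdA==")
print(key_query_name(sig_header))  # mail2024._domainkey.example.com

# A made-up key record whose p= holds placeholder bytes rather than a real key.
record = "v=DKIM1; k=rsa; p=dGVzdCBrZXk="
print(public_key_bytes(record))    # b'test key'
```

Everything here trusts whatever answer comes back for that DNS name, which is exactly the weak point described above.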

Another approach is to use a web or hierarchy of trust. But that, along with certificates, is a topic for another article.