Bounces, complaints and metrics

- laura

- July 31, 2012

- Best practices

In the email delivery space there are a lot of numbers we talk about, including bounce rates, complaint rates, acceptance rates and inbox delivery rates. These are all good numbers for telling us about a particular campaign or mailing list. Usually these metrics all track together. Low bounce rates and low complaint rates correlate with high delivery rates and high inbox placement.

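But sometimes they don’t, and a sender with low complaint and bounce rates still sees poor inbox delivery. Why does this happen?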

There are a number of different reasons that mail with low complaint and bounce rates will have low inbox delivery rates. Some of them are signs of improper behaviour on the part of the sender; some of them are simply a consequence of how mail is currently filtered. Whatever the reason, it confuses a lot of senders. To many people, having low complaint rates and low bounce rates means good inbox delivery.

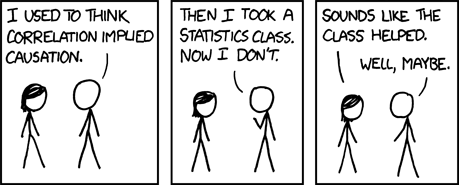

It’s probably partially the fault of delivery experts that so many people make such a tight mental connection between low complaints and bounces and inbox delivery. When customers and clients approach us with delivery problems, many of us will look at complaint and bounce rates and advise that both should be lower. As we work with clients to lower those rates, their inbox delivery often improves. So, clearly, low bounce and complaint rates mean higher inbox rates.

The problem is, though, that complaint rates and bounce rates are proxy measurements. They’re used to measure how much a mail is wanted by recipients and how clean the mailing list is. A list with high complaints and bounces is usually a list that doesn’t have much permission associated with it. Recipients generally don’t want mail from that sender.
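To make the proxy nature of these numbers concrete, here is a minimal sketch of how the rates are commonly calculated. The definitions are assumptions, not something this post specifies: senders and ISPs use different denominators (complaints over delivered mail versus over inboxed mail, for example), and inbox counts usually come from seed lists or panel data rather than from the sender’s own logs. The `CampaignCounts` type, the `campaign_rates` function and the counts in the usage note are hypothetical and purely illustrative.

```python
from dataclasses import dataclass


@dataclass
class CampaignCounts:
    """Raw counts for one mailing (hypothetical structure)."""
    sent: int        # messages attempted
    bounced: int     # messages rejected by the receiving server
    complaints: int  # "this is spam" reports received via feedback loops
    inboxed: int     # accepted messages estimated to have reached the inbox


def rate(numerator: int, denominator: int) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def campaign_rates(c: CampaignCounts) -> dict[str, float]:
    """Compute the usual delivery metrics under the assumed definitions."""
    delivered = c.sent - c.bounced  # accepted by the receiving server
    return {
        "bounce_rate": rate(c.bounced, c.sent),
        "acceptance_rate": rate(delivered, c.sent),
        # Assumption: complaints and inbox placement are measured
        # against delivered mail, not against mail sent.
        "complaint_rate": rate(c.complaints, delivered),
        "inbox_rate": rate(c.inboxed, delivered),
    }
```

Calling `campaign_rates(CampaignCounts(sent=10000, bounced=200, complaints=10, inboxed=4900))` with these made-up counts gives a bounce rate of 2%, a complaint rate of roughly 0.1% and an inbox rate of 50%. Every figure is a ratio of counts. None of them says directly whether recipients wanted the mail.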

It’s important to remember, though, that complaint and bounce rates don’t specifically measure how wanted a particular mail is. The reason we focus on them is that they are easy to measure and they are correlated with how wanted an email is. We can use them to give us information. Delivery experts use that information to craft solutions to delivery problems. But we’re not actually fixing complaint rates and we’re not actually fixing bounce rates. Often the things I tell clients don’t directly lower complaint rates and they don’t directly lower bounce rates. Instead, I focus on fixing the policies and processes that are causing poor delivery. As those things get cleaned up, the complaint rates and bounce rates decrease and inbox rates increase.

It’s very possible to have low complaint rates and low bounce rates without having a healthy list. That’s often what’s going on when senders have “great stats” and “zero complaints” but are still seeing poor inbox rates. These senders focus on getting the great stats, because they think that it’s the great stats that lead to the good inbox rate. But they have it backwards. It’s good list management, hygiene and engagement that lead to good inbox rates. Complaint and bounce rates are just one way to measure list management, hygiene and engagement.

Not every list with good stats has those good stats because of good list management, hygiene and engagement. These rates are fairly easy to manipulate, and some senders spend a lot of money and time manipulating their stats. Manipulating delivery stats did result in somewhat better inbox delivery, which is why so many companies spent so much time doing it. ISPs are adapting, though, and this is why we’re seeing senders with “great stats” have poor inbox delivery.